I have already addressed peak LTE data rates and shown how they are calculated. But what data rates would a user actually experience? This is what really matters from a quality-of-experience perspective.

A number of factors affect the ultimate capacity offered by a cell site. Two critical factors are interference and network loading. These factors are interrelated: higher network loading, a measure of the number of active subscribers, results in greater interference.
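One way to see why loading and interference move together is a toy SINR model in which each neighboring cell's interference is scaled by how busy that cell is. The sketch below assumes that simplification (a common system-level approximation, not the simulation behind this post); the function names, the power levels and the `load` parameter are all hypothetical.

```python
import math


def db_to_linear(db: float) -> float:
    """Convert a power in dB/dBm to linear units."""
    return 10 ** (db / 10)


def linear_to_db(x: float) -> float:
    """Convert a linear power ratio back to dB."""
    return 10 * math.log10(x)


def sinr_db(signal_dbm: float, interferers_dbm: list[float],
            noise_dbm: float, load: float) -> float:
    """Toy downlink SINR in dB.

    Assumption: every neighboring cell transmits on the same frequency
    (reuse 1) and its interference scales linearly with `load`, the
    fraction of its resources in use (0.0 = idle, 1.0 = fully loaded).
    """
    if not 0.0 <= load <= 1.0:
        raise ValueError("load must be between 0 and 1")
    s = db_to_linear(signal_dbm)
    i = load * sum(db_to_linear(p) for p in interferers_dbm)
    n = db_to_linear(noise_dbm)
    return linear_to_db(s / (i + n))


if __name__ == "__main__":
    # Hypothetical received powers (dBm) for a user part way to the cell edge.
    serving, neighbors, noise = -80.0, [-85.0, -88.0], -100.0
    for load in (0.0, 0.25, 0.5, 1.0):
        print(f"load={load:.2f}  SINR={sinr_db(serving, neighbors, noise, load):5.1f} dB")
```

As neighbor load rises from idle to full, the same user's SINR falls, which is the loading/interference coupling described above.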

Interference determines signal quality, and signal quality determines the modulation scheme: higher-order modulation schemes carry more bits over the air. Interference is typically lowest close to the cell center, where the distance to interfering cells is largest, and highest at the cell edge, where the signal from the serving cell is weakest. Data rates are therefore not uniform throughout the cell: they are highest close to the cell center and lowest at the cell edge.

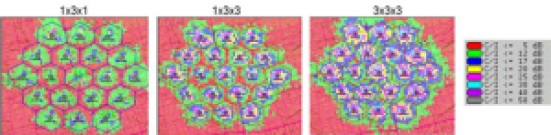
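The link between signal quality and modulation can be sketched as a threshold lookup: pick the highest-order scheme the SINR can support. LTE Release 8 downlink uses QPSK, 16QAM and 64QAM (2, 4 and 6 bits per symbol); the SINR switching thresholds below are hypothetical round numbers chosen for illustration, not values from the standard or from the simulations behind this post. Real link adaptation also varies the coding rate, which this sketch ignores.

```python
from __future__ import annotations

# Hypothetical SINR thresholds (dB) at which each modulation becomes usable.
# Bits per symbol are fixed by the modulation: QPSK=2, 16QAM=4, 64QAM=6.
MODULATIONS = [
    # (name, bits_per_symbol, min_sinr_db), highest order first
    ("64QAM", 6, 18.0),
    ("16QAM", 4, 10.0),
    ("QPSK", 2, -5.0),
]


def pick_modulation(sinr_db: float) -> tuple[str, int] | None:
    """Return (name, bits_per_symbol) for the highest-order modulation whose
    threshold the SINR meets, or None if the link cannot be sustained."""
    for name, bits, threshold in MODULATIONS:
        if sinr_db >= threshold:
            return name, bits
    return None


if __name__ == "__main__":
    # Illustrative SINR values moving from cell center to cell edge.
    for where, sinr in [("center", 22.0), ("mid-cell", 12.0), ("edge", 0.0), ("outage", -8.0)]:
        print(where, pick_modulation(sinr))
```

Checking thresholds from the highest order down means the first match is always the most efficient scheme the link can sustain, so bits per symbol fall as the user moves toward the cell edge.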

To improve the capacity of a cellular network, different frequencies are used on adjacent cell sites. This practice is called frequency reuse, and the number of distinct channels in the pattern is the frequency reuse factor. However, since LTE is designed to provide broadband services, it uses wide channel bandwidths: 5, 10 and 20 MHz are the most common (e.g., MetroPCS deployed a 5 MHz system, Verizon a 10 MHz system and TeliaSonera a 20 MHz system). This implies that standard frequency reuse, as used in traditional cellular networks, is not possible due to lack of spectrum (e.g., Verizon's 700 MHz spectrum is 2×11 MHz, enough for only one 10 MHz channel in each direction). LTE networks are therefore essentially reuse-1 networks. At full loading, significant interference can be expected, especially at the cell edge. Dropping to a lower-order modulation scheme makes the link more robust to interference, at the cost of reduced capacity. To further improve LTE capacity, clever techniques for assigning the subcarriers of the OFDMA physical layer to users can be deployed, which I will address in a future post.

The figure below shows the distribution of signal quality in a small network of 19 three-sectored cells for different reuse plans. The rightmost plot is for single-frequency reuse and shows the poorest signal quality, while the middle and left plots are for three and nine channels, respectively, and show better performance.
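The spectrum argument can be made concrete: a reuse-K plan needs K distinct channels in each direction, so the largest usable reuse factor is the number of whole channels that fit in the operator's spectrum. The helper below is hypothetical, ignores guard bands, and works in integer kHz to avoid floating-point rounding.

```python
def max_reuse_factor(spectrum_mhz: float, channel_mhz: float) -> int:
    """Largest number of non-overlapping channels of `channel_mhz` that fit
    in `spectrum_mhz` of spectrum in one direction (guard bands ignored)."""
    spectrum_khz = round(spectrum_mhz * 1000)
    channel_khz = round(channel_mhz * 1000)
    return spectrum_khz // channel_khz


if __name__ == "__main__":
    # Verizon's 700 MHz block: 2 x 11 MHz, i.e. 11 MHz in each direction.
    print(max_reuse_factor(11, 10))  # 1: only one 10 MHz channel fits
    print(max_reuse_factor(11, 5))   # 2: still short of a reuse-3 plan
    print(max_reuse_factor(30, 10))  # 3: reuse 3 at 10 MHz needs 30 MHz per direction
```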

Interference also works against MIMO spatial multiplexing (MIMO-SM), which requires good signal quality to operate. Hence, it is likely that MIMO-SM is not operational over a large percentage of the cell coverage area, which results in lower capacity. Consider that peak LTE rates include a MIMO-SM capacity gain factor of 2: the peak downlink LTE throughput for a 20 MHz channel is about 150 Mbps, a spectral efficiency (SE) of 7.5 b/s/Hz. Losing MIMO-SM halves the spectral efficiency to 3.75 b/s/Hz.
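The peak-rate arithmetic is easy to check in a few lines. This sketch only reproduces the figures quoted above and treats the MIMO-SM gain as exactly 2, the idealized factor the peak figure assumes; the function name is hypothetical.

```python
def spectral_efficiency(throughput_mbps: float, bandwidth_mhz: float) -> float:
    """Spectral efficiency in b/s/Hz: Mbps divided by MHz (the 10^6 factors cancel)."""
    return throughput_mbps / bandwidth_mhz


# Figures quoted in the text: ~150 Mbps peak downlink in a 20 MHz channel,
# which already includes a MIMO-SM gain factor of 2.
PEAK_DL_MBPS = 150.0
BANDWIDTH_MHZ = 20.0
MIMO_SM_GAIN = 2.0

se_with_mimo = spectral_efficiency(PEAK_DL_MBPS, BANDWIDTH_MHZ)
se_without_mimo = se_with_mimo / MIMO_SM_GAIN

if __name__ == "__main__":
    print(f"Peak SE with MIMO-SM:    {se_with_mimo:.2f} b/s/Hz")    # 7.50
    print(f"Peak SE without MIMO-SM: {se_without_mimo:.2f} b/s/Hz")  # 3.75
    print(f"Peak DL rate without MIMO-SM: {PEAK_DL_MBPS / MIMO_SM_GAIN:.0f} Mbps")  # 75
```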

The table below shows the average capacity for different LTE channelizations and the corresponding spectral efficiency. Smaller channels provide lower spectral efficiency than larger ones because of control signaling overhead and loss of scheduling gain.

| Channel Bandwidth | 1.4 MHz | 3 MHz | 5 MHz | 10 MHz | 15 MHz | 20 MHz |
|---|---|---|---|---|---|---|
| SE Relative to 10 MHz | 62% | 83% | 99% | 100% | 100% | 103% |
| Average DL Capacity (Mbps) | 1.56 | 4.48 | 8.91 | 18 | 27 | 37.08 |
| Average UL Capacity (Mbps) | 0.8 | 2.1 | 3.6 | 8 | 11 | 16 |
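The relative SE row can be recomputed from the capacity rows: dividing average throughput by channel bandwidth gives spectral efficiency, and normalizing by the 10 MHz value reproduces the 62% to 103% figures. This is only a consistency check on the table (the average capacities themselves come from simulation); the function name is hypothetical and the UL column is included for comparison.

```python
# (bandwidth MHz, average DL capacity Mbps, average UL capacity Mbps), from the table
TABLE = [
    (1.4, 1.56, 0.8),
    (3.0, 4.48, 2.1),
    (5.0, 8.91, 3.6),
    (10.0, 18.0, 8.0),
    (15.0, 27.0, 11.0),
    (20.0, 37.08, 16.0),
]


def average_se_table(rows, reference_mhz=10.0):
    """Return (bandwidth, DL SE, UL SE, DL SE relative to the reference in %)
    for each row, with SE in b/s/Hz."""
    ref = next(dl / bw for bw, dl, _ in rows if bw == reference_mhz)
    out = []
    for bw, dl, ul in rows:
        se_dl = dl / bw
        se_ul = ul / bw
        out.append((bw, se_dl, se_ul, round(100 * se_dl / ref)))
    return out


if __name__ == "__main__":
    for bw, se_dl, se_ul, rel in average_se_table(TABLE):
        print(f"{bw:>4} MHz  DL {se_dl:.2f} b/s/Hz  UL {se_ul:.2f} b/s/Hz  DL rel. {rel}%")
```

The downlink values run from about 1.1 b/s/Hz at 1.4 MHz to about 1.85 b/s/Hz at 20 MHz, a small fraction of the 7.5 b/s/Hz peak.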

To conclude, single-channel LTE networks will offer an improvement in spectral efficiency over current 3G networks, but those expecting 150 Mbps download speeds will be disappointed. Network operators cannot plan their network capacity based on peak rates; they must plan based on average rates, which will be on the order of 1.4–1.8 b/s/Hz in the downlink.
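To see what that range means for a 20 MHz carrier, multiply bandwidth by spectral efficiency. The sketch compares the 1.4–1.8 b/s/Hz planning range with the 7.5 b/s/Hz peak figure; the result is average capacity per cell, which is shared among all active users in that cell. The function name is hypothetical.

```python
PEAK_SE = 7.5               # b/s/Hz: 150 Mbps in 20 MHz with MIMO-SM (from the text)
AVG_SE_RANGE = (1.4, 1.8)   # b/s/Hz: downlink planning range from the conclusion


def planning_capacity_mbps(bandwidth_mhz: float, se_bps_per_hz: float) -> float:
    """Cell throughput in Mbps for a given bandwidth (MHz) and SE (b/s/Hz)."""
    return bandwidth_mhz * se_bps_per_hz


if __name__ == "__main__":
    bw = 20.0
    low, high = (planning_capacity_mbps(bw, se) for se in AVG_SE_RANGE)
    peak = planning_capacity_mbps(bw, PEAK_SE)
    print(f"{bw:.0f} MHz: plan for {low:.0f}-{high:.0f} Mbps per cell, not {peak:.0f} Mbps")
```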

This post would be much clearer if it showed the calculation to get from your peak data rate of about 42 Mbps (no MIMO) to your average data rate of about 9 Mbps.

Specifically, it would help if you supplied your assumptions for interference and network loading.

Thanks

Jeff, the results are based on computer simulations with Release 8 system assumptions and UE category 4.

Frank